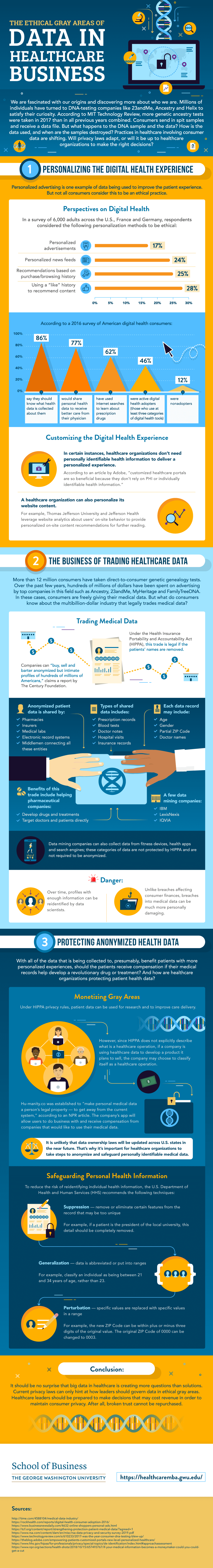

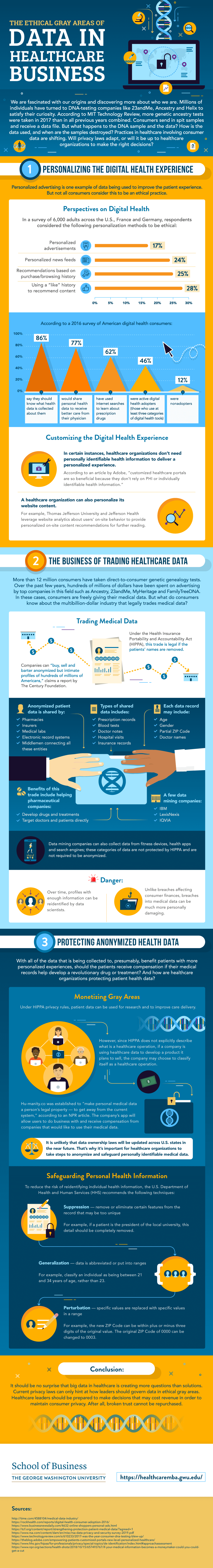

We are fascinated with our origins and discovering more about who we are. Millions of individuals have turned to DNA-testing companies like 23andMe, Ancestry and Helix to satisfy their curiosity. According to MIT Technology Review, more genetic ancestry tests were taken in 2017 than in all previous years combined. Consumers send in spit samples and receive a data file. But what happens to the DNA sample and the data? How is the data used, and when are the samples destroyed? Practices in healthcare involving consumer data are shifting. Will privacy laws adapt, or will it be up to healthcare organizations to make the right decisions?

To learn more, check out the infographic below created by the George Washington University School of Business, Online Healthcare MBA program.

<p style="clear:both;margin-bottom:20px;"><img src="https://res.cloudinary.com/dqtmwki9i/image/fetch/https://www.project-alpine.com/assets/5bacd378-7d09-4ee3-add3-59287dfdbc58" alt="How healthcare data can be anonymized, traded, potentially reidentified, and protected." style="max-width:100%;" /></p>

Personalizing the Digital Health Experience

Personalized advertising is one example of data being used to improve the patient experience. But not all consumers consider this to be an ethical practice.

Perspectives on Digital Health

In a survey of 6,000 adults across the U.S., France and Germany, 28% of respondents considered using a “like” history to recommend content an ethical personalization method. Recommendations based on purchase or browsing history were deemed ethical by 25% of respondents, and personalized news feeds by 24%.

According to a 2016 survey of American digital health consumers, 86% of respondents said they should know what personal health data is collected. 77% said they would share personal health data in exchange for better care from their physicians, and 62% said they had used internet searches to learn about prescription drugs.

Customizing the Digital Health Experience

In certain instances, healthcare organizations don’t need personally identifiable health information to deliver a personalized experience. According to an article by Adobe, “customized healthcare portals are so beneficial because they don’t rely on PHI or individually identifiable health information.”

A healthcare organization can also personalize its website content. For instance, Thomas Jefferson University and Jefferson Health use website analytics on visitors’ on-site behavior to recommend related content for further reading.
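To make personalization without PHI more concrete, here is a minimal Python sketch of one common approach: recommending pages that are often viewed together in anonymous browsing sessions. It is not Jefferson Health's actual method, which the source does not describe. The page paths, session data, and the `build_coviews` and `recommend` helpers are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical anonymous sessions: page paths only, no PHI or user identity.
sessions = [
    ["/conditions/asthma", "/treatments/inhalers", "/find-a-doctor"],
    ["/conditions/asthma", "/treatments/inhalers"],
    ["/conditions/asthma", "/wellness/air-quality"],
    ["/treatments/inhalers", "/wellness/air-quality"],
]


def build_coviews(sessions):
    """Count how often each pair of pages is viewed in the same session."""
    coviews = defaultdict(Counter)
    for pages in sessions:
        for a, b in combinations(sorted(set(pages)), 2):
            coviews[a][b] += 1
            coviews[b][a] += 1
    return coviews


def recommend(coviews, current_page, n=2):
    """Suggest the pages most often co-viewed with the current page."""
    return [page for page, _ in coviews[current_page].most_common(n)]


coviews = build_coviews(sessions)
print(recommend(coviews, "/conditions/asthma", n=1))  # ['/treatments/inhalers']
```

The only input is which pages were viewed together, so the recommendations never touch names, diagnoses, or other individually identifiable health information.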

The Business of Trading Healthcare Data

More than 12 million consumers have taken direct-to-consumer genetic genealogy tests. Over the past few years, hundreds of millions of dollars have been spent on advertising by top companies in the field such as Ancestry, 23andMe, MyHeritage and FamilyTreeDNA. In these cases, consumers are freely giving their medical data. But what do consumers know about the multibillion-dollar industry that legally trades medical data?

Trading Medical Data

According to a report by The Century Foundation, companies can “buy, sell and barter anonymized but intimate profiles of hundreds of millions of Americans.” Under the Health Insurance Portability and Accountability Act (HIPAA), this trade is legal as long as patients’ names and other identifying details are removed.

This anonymized patient data is shared by pharmacies, insurers, medical labs, and electronic health record systems, as well as by the middlemen that connect these entities. Shared data types include prescription records, blood tests, and hospital visits, and the information may include age, gender, partial zip codes, and doctor names. This trading has benefits. For instance, shared data can help pharmaceutical companies develop drugs and treatments and market them directly to doctors and patients.

Companies participating in healthcare-related data mining include IBM, LexisNexis, and IQVIA. These companies can also collect data from sources not covered by HIPAA or its anonymity requirements, such as fitness devices and health apps.

Trading medical data also carries risks. Profiles that contain enough information can eventually be reidentified by data scientists, and breaches of medical data can be far more personally damaging than breaches of financial data.
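The reidentification risk can be illustrated with a minimal linkage sketch in Python. It assumes a hypothetical shared dataset with names removed but age, gender, and a three-digit zip prefix kept, plus a hypothetical public dataset (such as a voter roll) that pairs the same fields with names. Any shared record whose combination of these quasi-identifiers is unique can be matched back to a person. All records and helper names are illustrative.

```python
from collections import Counter

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
shared_records = [
    {"age": 47, "gender": "F", "zip3": "208", "rx": "atorvastatin"},
    {"age": 47, "gender": "F", "zip3": "208", "rx": "metformin"},
    {"age": 62, "gender": "M", "zip3": "208", "rx": "lisinopril"},
    {"age": 35, "gender": "F", "zip3": "100", "rx": "sertraline"},
]

# Hypothetical public data that pairs the same fields with names.
public_profiles = [
    {"name": "Pat Lee", "age": 62, "gender": "M", "zip3": "208"},
    {"name": "Ana Cruz", "age": 35, "gender": "F", "zip3": "100"},
    {"name": "Kim Park", "age": 47, "gender": "F", "zip3": "208"},
]

QUASI_IDENTIFIERS = ("age", "gender", "zip3")


def qi_key(row):
    """Combine the quasi-identifier fields into one hashable key."""
    return tuple(row[field] for field in QUASI_IDENTIFIERS)


def reidentify(shared, public):
    """Link public names to shared records whose quasi-identifier key is unique."""
    counts = Counter(qi_key(r) for r in shared)
    unique = {qi_key(r): r for r in shared if counts[qi_key(r)] == 1}
    return {
        person["name"]: unique[qi_key(person)]["rx"]
        for person in public
        if qi_key(person) in unique
    }


print(reidentify(shared_records, public_profiles))
# {'Pat Lee': 'lisinopril', 'Ana Cruz': 'sertraline'}
```

Kim Park is not linked because two shared records have the same age, gender, and zip prefix. Requiring every combination to appear at least k times before release is the idea behind k-anonymity.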

Protecting Anonymized Health Data

With so much data being collected, presumably to benefit patients through more personalized experiences, should patients receive compensation if their medical records help develop a revolutionary drug or treatment? And how are healthcare organizations protecting patient health data?

Monetizing Gray Areas

Under HIPAA privacy rules, patient data can be used for research and to improve care delivery. However, because HIPAA doesn’t explicitly define what counts as a healthcare operation, a company using healthcare data to develop a product it plans to sell may choose to classify itself as a healthcare operation.

Some companies are trying to change this model. Hu-manity.co, for example, was established to “make personal medical data a person’s legal property – to get away from the current system,” according to an NPR article. The company’s app will allow users to do business with, and be compensated by, companies that want to use their medical data.

It’s unlikely that data ownership laws will be updated across U.S. states soon. That’s why it’s important for healthcare organizations to take steps to anonymize and safeguard personally identifiable medical data.

Safeguarding Personal Health Information

To lower the risk that individual health information can be reidentified, the U.S. Department of Health and Human Services (HHS) recommends several protective techniques. The first, suppression, removes features from a record that may be too unique. The second, generalization, replaces specific values with less precise ones, such as abbreviated data or ranges. The third, perturbation, replaces specific values with equally specific but different values, such as reporting a patient’s age as a random value within a few years of the actual age.
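The three techniques can be sketched in Python as small functions over a single record. This is a minimal illustration under stated assumptions, not the HHS specification: the record fields, the 10-year age bands, the three-digit zip prefix, and the plus-or-minus two-year perturbation window are all hypothetical choices made for the example.

```python
import random

# Hypothetical patient record; field names are illustrative only.
record = {
    "age": 47,
    "gender": "F",
    "zip": "20852",
    "diagnosis": "type 2 diabetes",
    "rare_condition": "Fabry disease",
}


def suppress(rec, fields):
    """Suppression: drop fields that are too unique to share."""
    return {k: v for k, v in rec.items() if k not in fields}


def generalize(rec):
    """Generalization: keep the first three zip digits and bucket age into a 10-year range."""
    out = dict(rec)
    out["zip"] = rec["zip"][:3]
    low = rec["age"] // 10 * 10
    out["age"] = f"{low}-{low + 9}"
    return out


def perturb(rec, window=2, rng=random):
    """Perturbation: replace the exact age with a random age within +/- window years."""
    out = dict(rec)
    out["age"] = rec["age"] + rng.randint(-window, window)
    return out


print(suppress(record, {"rare_condition"}))
print(generalize(record))  # age becomes '40-49', zip becomes '208'
print(perturb(record, rng=random.Random(42)))
```

Each function returns a new record and leaves the original unchanged, so the techniques can be applied and compared independently.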

Conclusion

It shouldn’t be a surprise that big data in healthcare is raising more questions than answers. Current privacy laws can only hint at how leaders should govern data in ethical gray areas. Healthcare leaders should be prepared to make decisions that may cost revenue in order to maintain consumer privacy. After all, broken trust can’t be repurchased.